How to enable ad-hoc analysis for operators

The pain: Why can't I just double-click into the data?

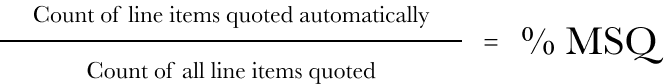

As a Product Manager for the early Marketplace product at Flexport, my team’s goal was to drive quoting automation. We measured our progress towards this goal via a metric called Marketplace Share of Quote (MSQ).

Our team’s goal was to drive this metric to 100%. With more line items automatically available in the Marketplace, Flexport's operations teams could assemble and share quotes faster, leading to faster bookings.

However, understanding why this metric rose or fell required going layers into the data behind the charts. There could be several reasons why an employee was manually quoting certain line items: the type of goods involved, the shipment's route, or even a client's unique preferences.

To see the correlation between these causes and their effect on the metric, I could try writing complicated SQL, which could take hours for each question. Or I could ask my data team for help which might take days or weeks for the data team to prioritize each time I had a question. Or I could export the data to spreadsheets which is somewhat faster but non-repeatable and near impossible for the data team to debug if I had follow-up questions.

Operators and data teams feel both sides of this pain the world over. So what’s the core problem, and how do we solve it?

The problem: The questions have changed. Tools and teams haven’t caught up.

Most questions like “How’s revenue doing in this region?” or “What’s our average issue response time?” are quickly answered in the dashboards of Salesforce, Zendesk, and BI tools.

Instead, operators are now asking, “Which of our paid social channels are driving revenue in this segment in this region?” or “How much sooner are customers with above-average issue response times upgrading to our enterprise plan?”

Operators can try to answer these questions with enough spreadsheet sweat. But then they have to answer them repeatedly by exporting fresh data each week and adjusting their brittle references.

Data teams can repeatedly answer these questions with SQL, as done in the MSQ example above until it makes sense to create data models that answer the questions elegantly and flexibly. But even then, the interfaces of most data tools aren’t flexible enough to support the myriad of questions operators have. For example, how would an operator who doesn’t know SQL be able to combine a support tickets model with a subscriptions model?

Because of this, teams remain stuck in data exploration purgatory, repeatedly asking for new joins of different tables with more attributes to slice and dice. This relationship leads to frustrated operators, burnt-out data teams, and slower, less data-driven organizations.

SaaS tools and BI tools have been successful in helping teams align around KPIs over the past decade. But to answer the next decade’s questions, our data tools and data culture must evolve.

The solution: Introducing data exploration and how to enable it for operators

To solve this hairy problem, we need to create an environment for more efficient data exploration. This environment requires modeled data, interfaces for power users, and helpful documentation.

Data transformation enables flexibility

At its core, data exploration is analyzing raw data to develop new insights. Operators will often request to see raw data, but they typically need some transformation and cleanup before looking at it. Without transformation, operators may see poorly formatted data littered with test and duplicate records. Even after it's cleaned up, most of this data will still not contain enough of the associated attributes operators want to slice and dice to make insights.

Instead of repeatedly providing SQL queries for business teams to export to CSV and explore further, data teams are increasingly providing modeled data. By modeling data using techniques like the “One Big Table” approach, data teams can preconfigure "wide tables" (i.e. tables with many columns) that combine tables of related data, say customers and orders, into one table.

Operators can then use these wide tables to easily slice and dice across various attributes, build pivot tables and create visualizations. Because the visualizations and pivots are constructed from the same source, operators can then "double-click" into the chart to see the underlying aggregations and row-level data. More transformed data leads to more independent analysis for operators and fewer ad-hoc requests for data teams.

Power users deserve powerful interfaces

Even as data transformation best practices continue to become mainstream, operators still need an efficient interface to easily explore these transformed tables. Instead of trying office hours and staring at W3 schools tutorials to learn SQL, why not give operators an intuitive way to explore without exporting the data to CSV?

BI tools have gotten increasingly better over the past decade at providing visual SQL interfaces for non-technical teams but I've always found these tools limiting for deep exploration. In-depth analysis requires more advanced functionality that a couple of pretty charts and pre-defined filters. Operators need calculated fields, ways to join, pivot tables, and more. The rise of spreadsheet-based BI tools and no-code, visual SQL tools gives hope to operators looking to use their skills to quickly explore real-time data.

Arm operators with knowledge

With interfaces that allow for advanced exploration, operators still struggle to understand the data model and what each table and column consists of. Traditionally, data teams have tried to invest in manually maintained documentation in Confluence, Notion, or a Google site. These efforts typically fall short as the documentation lives in separate systems away from the BI tool, where only the most determined operators will venture to find the information they need. Worse, data teams tend to forget to update the documentation as they push out continual changes to the data model, creating a kind of broken window environment for documentation.

To fill this context gap, data teams should invest in providing automated data catalogs that meet the operators where the analysis happens, not in a manually maintained wiki.

Together, transformed data, no-code interfaces, and accurate, contextual documentation help operators quickly answer their questions without waiting for answers or needing to manually export to CSV. Data teams receive fewer ad-hoc questions and can focus on more strategic work, leading to less burnout and more fulfilled teams.

More considerations for the future of data exploration

As the world of reporting and analytics moves beyond the dashboard and towards exploration, data teams will be faced with more familiar problems that need to be solved in new, automated ways.

Creating a metrics layer

Data teams will need faster, scalable ways to model data and prepare it for operators to independently explore. The metrics layer is a hot topic in the data world these days, as this is one of the best bets for filling the current gap between raw data and the metrics that operators want to consistently explore.

Powering positive feedback loops

With tools that finally help operators explore data independently in an online system, data teams will need insights into how what exactly these operators are doing. Better visibility and faster collaboration between teams will break down current silos and make it easier for data teams to build the right thing at the right time.

Maintaining governance

Traditional request and response workflows should decline as operators as data access continues to be democratized. This means that data teams can't obfuscate sensitive data contained in the output of their ad-hoc response. Instead, data teams must invest in systems that enforce proper role-based access control and help stakeholders share insights with secure guardrails.

Introducing Canvas, a new way for operators to explore data

At Canvas, we’re thrilled to be working on the future of data exploration for operators. Canvas is a collaborative data exploration tool for operators to make decisions without SQL. Unlike traditional BI tools, Canvas has:

- A spreadsheet-like interface for your teams to pivot, write formulas, join, and create charts without SQL

- Figma-like collaboration lets your teams communicate where the data lives, while usage metrics inform data teams of who is using specific models

- A native dbt integration so your data models and documentation about the tables and columns are automatically synced and surfaced directly to operators

As a founder, I'm building the product I wanted years ago as a PM, spending hours diving into the data behind the MSQ chart. If you feel those pains, head to canvasapp.com/signup and let me know what you think.